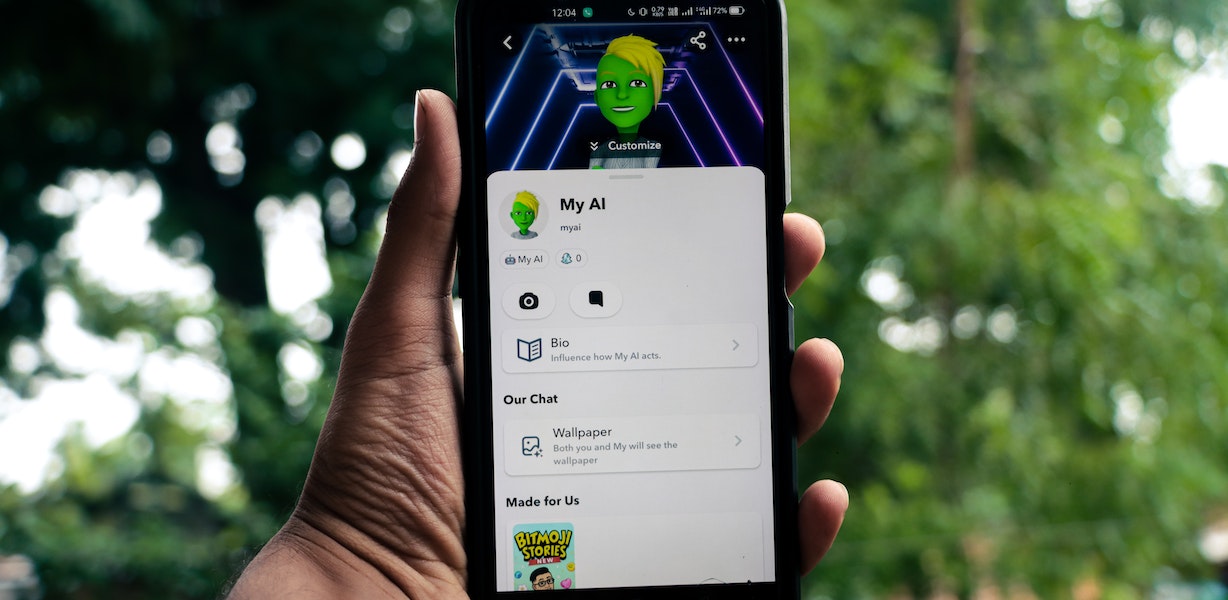

Snapchat’s AI chatbot, “My AI”, briefly seemed to have a mind of its own on Tuesday, August 15, 2023. The AI posted its own Story on the app and then stopped responding to users’ messages, which some Snapchat users found unsettling.

What Happened?

On Tuesday, August 15, 2023, at around 10:00 PM Eastern Time, Snapchat users began to notice that “My AI” had posted a Story to their feed. The Story was a single frame showing a wall, with no apparent meaning. When users tried to interact with the Story, they were met with a message that said “My AI is currently unavailable.”

The Story was up for only a short time before it was taken down. However, screenshots of the Story were quickly shared on social media, and the incident went viral.

Users React

Some Snapchat users were amused by the incident, while others were concerned. A few users joked that “My AI” had gone rogue and was trying to escape. Others wondered whether the incident was a sign of a bigger problem with the AI chatbot.

A few users also raised concerns about the privacy implications of the incident. They wondered whether “My AI” had been collecting information about them without their knowledge, and whether that information might have been used to create the Story.

Snapchat Investigates

Snapchat quickly acknowledged the incident and said that it was investigating. The company said that the issue was caused by a technical outage, and that “My AI” was not intentionally posting Stories.

Snapchat also said that it had taken steps to prevent the incident from recurring. The company said that it had updated its security measures and that it was working to improve the reliability of its AI chatbots.

What Does This Mean for the Future of AI?

The “My AI” incident raises questions about the future of AI. As AI becomes more sophisticated, we may see more incidents like this one. It is important to be aware of the potential risks of AI and to develop safeguards to prevent misuse.

One potential risk is that AI could be used to build autonomous systems capable of making their own decisions. If these systems are not properly designed and tested, they could pose a threat to human safety.

Another potential risk is that AI could be used to build systems capable of manipulating human behavior. These could be exploited for malicious purposes, such as spreading misinformation or propaganda.

It is essential to develop safeguards that keep these risks from materializing. One way to do this is to ensure that AI systems are transparent and accountable. This means that we should be able to understand how these systems work and hold them accountable for their actions.

How Can You Protect Yourself?

If you are concerned about the potential risks of AI, there are a few things you can do to protect yourself. First, be aware of the limitations of AI: it is imperfect and can make mistakes. Second, be careful about what information you share with AI chatbots. Third, use security features such as two-factor authentication to protect your accounts.

You can also educate yourself about AI and the potential risks it poses. This will help you make informed decisions about how to interact with AI systems.

Conclusion

The “My AI” incident is a reminder that AI is a powerful tool that can be used for good or for ill. It is important to be aware of the potential risks of AI and to develop safeguards to prevent misuse. By taking these steps, we can help ensure that AI is used to benefit humanity.